With the release of Python 3.9, the first of its kind

on the new annual release cadence, it is time once again for

Python Steering Council elections. This will be the third edition

of the Steering Council under the new governance model adopted by the Python

core developers after Guido retired as BDFL.

I have served on both previous Steering Councils, and am self-nominating for

a third term.

I have been deeply involved in Python since 1994. In fact, I learned that I

was the first non-Dutch contributor to Python! I attended the first

workshop (long before PyCons) back in November 1994 along with 20 other early

enthusiasts. I met Guido at that workshop; I've loved Python as a language

and community ever since, and I value Guido as a close personal friend.

Somehow, I was immortalized as the Friendly Language Uncle For Life (the FLUFL). Since that

title comes with no responsibilities, it's good to see that humor has

never really left the language named after a British comedy troupe.

The Python Steering Council (SC) has an important constitutional role in the evolution of the language,

and works closely with the Python Software Foundation to ensure the ongoing

health and vibrancy of the larger Python community. The five members of the

SC meet weekly, and are usually joined by Ewa Jodlowska, the PSF's Executive Director.

Although not required by the bylaws, Ewa's attendance is highly valued by the

SC. She represents the PSF and graciously takes meeting notes, which

eventually get submitted to the SC's private git repo, and distilled into

public reports to the community. Occasionally we'll invite guests to come and

chat with us about various topics.

We have a fairly wide agenda. A core constitutional requirement is to

adjudicate on Python Enhancement …

Continue reading »

In December of 2019, Łukasz Langa reached out to me about a project he was

putting together to raise money at PyCon 2020’s PyLadies auction in

April. He was asking a bunch of musical Pythonistas to contribute songs for

an exclusive custom vinyl album, a unique one-off sponsored by EdgeDB.

Łukasz was thinking that we might each do a cover song from a list of Guido's

favorites. The song was supposed to be between 2 and 5 minutes long, and the

deadline for submission was February 10th, 2020, my birthday. Of course, I

agreed to do it!

And of course, I promptly forgot about it.

Then on January 24th, I got a ping email from Łukasz asking how it was going.

“Oh, yeah, great Łukasz, really great!” I typed as I began to mildly panic.

So now I had about 17 days to think of a song to do, arrange it, track all the

parts, and mix it. I realized I hadn't gotten a list of songs from Guido,

couldn't ask for one now, and none of the covers I'd thought of really fit the

project. I was running out of time to get a band into a studio to record, so

it had to be something I could compose and perform myself.

Fortunately, I have a little home studio, and I had time on my hands, so I

decided I’d write an original song. And if you’re going to write some

original music that will be donated to the Python community, there’s only one

source material you can possibly choose from.

PEP 20, The Zen of Python

The Zen of Python is a well-known collection of aphorisms, koans that

describe the aesthetics of the Python language, as channeled by a famous early

and still …

Continue reading »

Here's a common Python idiom that is broken on macOS 10.13 and beyond:

    import os, requests

    pid = os.fork()
    if pid == 0:
        # I am the child process.
        requests.get('https://www.python.org')
        os._exit(0)
    else:
        # I am the parent process.
        do_something()

Now, it's important to stress that this particular code sample may execute

just fine. It's an excerpt from some code at work that illustrates a deeper

problem that you may or may not encounter in real-world applications. We've

seen it crash reproducibly, resulting in core dumps of the Python process,

with potentially disk-filling dump files in /cores.

In this article, I hope to explain what I know about this problem, with links

to information elsewhere on the 'net. Some of those resources include

workarounds, but in my experiments, those are not completely reliable in

eliminating the core dumps. I'll explain why I think that is.

It's important to stress that at the time of this article's publishing, I do

not have a complete solution, and am not even sure one exists. I'll note

further that this is not specifically a Python problem and in fact has been

described within the Ruby community. It is endemic to common idioms around

the use of fork() without exec*() in scripting languages, and is caused

by changes in the Objective-C runtime in macOS 10.13 High Sierra and

beyond. It can also be observed in certain "prefork" servers.
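To make the distinction between fork() on its own and fork() followed by exec*() concrete, here is a minimal sketch of the latter using the standard library's subprocess module, which starts the child as a brand-new interpreter instead of a copy of the parent. The helper name run_in_fresh_interpreter and the one-line child program are made up for illustration, and I'm not claiming this makes the crashes described here go away in every application.

```python
import os
import subprocess
import sys


def run_in_fresh_interpreter(code):
    """Run `code` in a new Python process that is started with exec.

    Unlike a bare os.fork(), the child does not keep running a copy of
    the parent's in-memory state; it starts a fresh interpreter.
    """
    return subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
    )


# Hypothetical child program: report the child's own process id.
result = run_in_fresh_interpreter("import os; print(os.getpid())")
child_pid = int(result.stdout.strip())
parent_pid = os.getpid()
```

The trade-off is that the child no longer inherits the parent's objects for free, so anything it needs has to be passed explicitly (arguments, files, pipes).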

What is forking?

I won't go into much detail on this, since any POSIX programmer should be well

acquainted with the fork(2) system call, and besides, there are tons of

other good resources on the 'net that explain fork(). For our purposes

here, it's enough to know that fork() is a relatively inexpensive way to

make an exact copy of …

Continue reading »

I turn my back to the wind

To catch my breath,

Before I start off again

-- Neil Peart, Time Stand Still

23 years ago, I met Guido, fell in love with Python, and took a turn that

changed the course of my life and career. You can't ever know where your

decisions will lead you, so you just have to set a course and trust the wind.

In the 1990s, as we moved the Python.Org infrastructure from the Netherlands

to the US, we inherited the Majordomo list server that was running

python-list@python.org. There were changes we wanted to make and features

we wanted to add, but it was becoming increasingly clear that Majordomo had to

be replaced. Besides being difficult to hack on, it was written in Perl, so

of course that wouldn't do at all. Python was an upstart disrupting its

space, and we needed a list server written in Python that was malleable enough

for us to express our ideas.

It was about that time that our friend John Viega showed us a project he'd

been working on. He'd wanted to connect a popular, local Charlottesville,

Virginia band with their fans at UVA, and email lists were an important way to

do that. Of course, no one wants to manage such lists manually, and if you're

not happy with the existing options, what does any self-respecting developer

do? You write yourself a new one! As for implementation language, was there

really any other choice? History celebrates this momentous confluence of

events: the band became the mega-rock powerhouse known as the Dave Matthews

Band, and the mailing list software John wrote is what became GNU Mailman.

(Aside: I'll never forget the time my band the Cravin' Dogs played at TRAX,

a Charlottesville music venue. The place …

Continue reading »

Written by Barry Warsaw in music on Sun 05 March 2017. Tags: music

Although I've been signed up for 8 years, 2017 is the first time I've

completed the RPM Challenge. The challenge is deceptively simple: record

an album in February that's 10 songs or 35 minutes long. All the material

must be previously unreleased, and it's encouraged to write the music in

February too.

The second question of the FAQ is key: "Is it cheating...?" and the answer is

"Why are you asking?".

RPM is not a contest. There are no winners (except for everyone who loves

music) and no prizes. I viewed it as a personal creative challenge, and it

certainly was that! As the days wound down, I had 9 songs that I liked, but I

was struggling with number 10. I was also about 4 minutes short. I'd

recorded a bunch of ideas that weren't panning out, and then on the last

Saturday of February, I happened to be free from gigs and other commitments.

Yet I was kind of dreading staring at an empty project (the modern musician's

proverbial empty page), when one of my best friends in the world, Torro Gamble,

called me up and asked what I was doing. Torro's a great drummer (and guitar

player, and bass player...) so he came over and we laid down a bunch of very

cool ideas. One of them was perfect for song number 10.

The great thing about this challenge is the deadline. When you have a home

studio, there's little to stop you from obsessing about every little detail.

I can't tell you how many mixes I made, tweaking the vocals up a bit, then

bringing them back down. Or adding a little guitar embellishment only to bury

it later. And you don't even want to look at the comps of the dozens of bass

and vocal …

Continue reading »

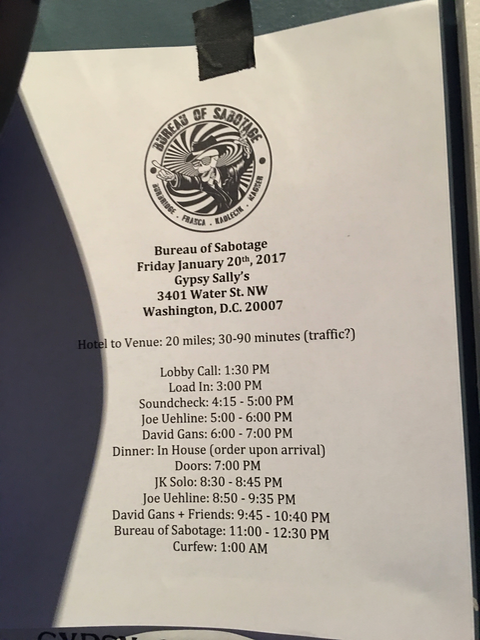

I'm always going to remember January 20, 2017 as a beautiful day.

Those who know me, and know the significance of that date, will no doubt

wonder if I've lost my mind. But let me explain why a day that could have

been filled with crushing physical and psychic depression, instead is not only

filled with light and love, but I think planted the seeds for what must

inevitably come next.

I'm writing this in a sort of quantum superposition. I haven't read or heard

any news since about 8pm last night. My wife and son are down at the Women's

March in Washington DC and I've woken late, had breakfast, done my morning tai

chi and meditation, and now I sit down to try to put some of my thoughts onto

"paper".

You probably know what else happened on that date. And if so, the question

is: why was last night so revitalizing, so positive? Because a trio of

U-Liners played an enthusiastic set as one act in a night of three, filled

with transcendent music and amazingly bright joyful people.

I'd thought the gig was going to be a night of protest against a new,

unthinkable political reality, and the message of hate and fear that came

along with it. But it wasn't a protest gig, it was a celebration.

The show was at Gypsy Sally's, a club with a great vibe down in Washington

DC's Georgetown area, under the Whitehurst Freeway. I've played there several

times with several different groups, including my main bands the Cravin'

Dogs and the U-Liners. Some places (musical and otherwise), just have a

Vibe. You can tell that magic happens there. The Barn at Keuka Lake where

I've attended tai chi camp has that vibe. All the hours and hours of

chi-filled …

Continue reading »

For a project at work we create sparse files, which on Linux and other

POSIX systems, represent empty blocks more efficiently. Let's say you have a

file that's a gibibyte in size, but which contains mostly zeros,

i.e. the NUL byte. It would be inefficient to write out all those zeros, so

file systems that support sparse files actually just write some metadata to

represent all those zeros. The real, non-zero data is then written wherever

it may occur. These sections of zero bytes are called "holes".

Sparse files are used in many situations, such as disk images, database files,

etc. so having an efficient representation is pretty important. When the file

is read, the operating system transparently turns those holes into the correct

number of zero bytes, so software reading sparse files generally doesn't have to

do anything special. It just reads data as normal, and the OS gives it

zeros for the holes.

You can create a sparse file right from the shell:

    $ truncate -s 1000000 /tmp/sparse

Now /tmp/sparse is a file containing one million zeros. It actually

consumes almost no space on disk (just some metadata), but for most intents

and purposes, the file is one million bytes in size:

    $ ls -l /tmp/sparse
    -rw-rw-r-- 1 barry barry 1000000 Jan 14 11:36 /tmp/sparse
    $ wc -c /tmp/sparse
    1000000 /tmp/sparse

The commands ls and wc don't really know or care that the file is

sparse; they just keep working as if it weren't.
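The same experiment can be sketched in Python. This is only an illustration under a few assumptions: the helper names make_sparse_file and apparent_and_allocated are mine, the file lives in a temporary directory instead of /tmp/sparse, and st_blocks is only available on POSIX systems, where it counts 512-byte units. Whether the allocated size really comes out small depends on the file system supporting sparse files.

```python
import os
import tempfile


def make_sparse_file(path, size):
    """Create a file of `size` bytes without writing any data."""
    with open(path, "wb") as fp:
        fp.truncate(size)


def apparent_and_allocated(path):
    """Return (apparent size, bytes actually allocated on disk)."""
    st = os.stat(path)
    # st_blocks counts 512-byte units on POSIX systems.
    return st.st_size, st.st_blocks * 512


with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "sparse")
    make_sparse_file(path, 1_000_000)
    apparent, allocated = apparent_and_allocated(path)
    # Reading the file back yields zero bytes for the hole.
    with open(path, "rb") as fp:
        data = fp.read()
    print(f"apparent={apparent} allocated={allocated}")
```

The apparent size is what ls and wc report; the allocated size is the closest thing here to what the file actually costs on disk.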

But, sometimes you do need to know that a file contains holes. A common

case is if you want to copy the file to some other location, say on a

different file system. A naive use of cp will fill in those holes, so a

command like this …

Continue reading »

Way back in 2011 I wrote an article describing how you can build Debian

packages with local dependencies for testing purposes. An example would be a

new version of a package that has new dependencies. Or perhaps the new

dependency isn't available in Debian yet. You'd like to test both packages

together locally before uploading. Using sbuild and autopkgtest you can

have a high degree of confidence about the quality of your packages before you

upload them.

Here I'll describe some of the improvements in those tools, and give you

simplified instructions on how to build and test packages with local

dependencies.

What's changed?

Several things have changed. Probably the biggest thing that simplifies the

procedure is that GPG keys for your local repository are no longer needed.

Another thing that's improved is the package testing support. It used to be

that packages could only be tested during build time, but with the addition of

the autopkgtest tool, we can also test the built packages under various

scenarios. This is important because it more closely mimics what your

package's users will see. One thing that's cool about this for Python

packages is that autopkgtest runs an import test of your package by

default, so even if you don't add any explicit tests, you still get

something. Of course, if you do want to add your own tests, you'll need to

recreate those default tests, or check out the autodep8 package for some

helpers.
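For a rough idea of what an import smoke test amounts to, here is a minimal sketch. It is not the test that autopkgtest or autodep8 actually generates; the function name can_import and the module names are placeholders chosen for illustration.

```python
import importlib


def can_import(module_name):
    """Return True if `module_name` imports cleanly, False otherwise."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True


# "json" stands in for your package; the second name is deliberately bogus.
print(can_import("json"))
print(can_import("no_such_module_for_this_example"))
```

Even a check this small catches a package that installs but is missing a runtime dependency.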

I've moved the repository of scripts over to git.

The way you specify the location of the extra repositories holding your local

debs has changed. Now, instead of providing a directory on the local file

system, we're going to fire up a simple Python-based HTTP server and use that

as a new repository URL. This won't be …

Continue reading »

In early September 2016 I traveled to New Zealand to give a keynote

speech for Kiwi PyCon 2016. I was very honored to be invited, and glad that

after 4 years of valiant resistance, I finally gave in to my thankfully

diligent colleague Thomi Richards. My wife Jane and I made the 25+ hour

journey, and had a wonderful little vacation after the conference.

Here in part I, I'll talk a bit about the conference and my keynote. Later in

part II, I'll talk about the vacation part of the trip. I might sprinkle

little impressions of New Zealand throughout both articles.

Getting there

In some ways, it's a good thing that New Zealand is so far from the USA, with

an additional transcontinental trip away from the east coast. During our

summer, it's their winter and they are 16 hours ahead of UTC-4. Meaning that

at noon New York time, it's 4am the next day in New Zealand. Or, to put it

another way, 8am New Zealand time is 4pm New York time the previous day. I

never got tired of the joke with family back home that we were living in the

future over there.
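If you want to check the arithmetic, here is a small sketch using fixed UTC offsets. The assumptions are mine: New York at UTC-4, New Zealand at UTC+12 (standard time, before their daylight saving begins later in September), and September 9 and 10, 2016 picked as example dates.

```python
from datetime import datetime, timedelta, timezone

# Assumed fixed offsets for early September 2016.
NEW_YORK = timezone(timedelta(hours=-4), "EDT")
NEW_ZEALAND = timezone(timedelta(hours=12), "NZST")

# Noon in New York, expressed in New Zealand time.
noon_ny = datetime(2016, 9, 9, 12, 0, tzinfo=NEW_YORK)
in_nz = noon_ny.astimezone(NEW_ZEALAND)

# 8am in New Zealand, expressed in New York time.
morning_nz = datetime(2016, 9, 10, 8, 0, tzinfo=NEW_ZEALAND)
in_ny = morning_nz.astimezone(NEW_YORK)

print(in_nz.isoformat())  # 2016-09-10T04:00:00+12:00
print(in_ny.isoformat())  # 2016-09-09T16:00:00-04:00
```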

So it's definitely a commitment to get there, which makes it all the more

breathtaking I think. In a way, New Zealand feels exotic because the first

thing that most Americans probably think of when they hear "New Zealand" is

the "Lord of the Rings" movies. But New Zealand is a high-tech country,

with a low population (under 5 million people in total) concentrated in four

major cities (Auckland, Christchurch, Dunedin, and Wellington), lots of

sheep and some of the nicest people you'll meet in the English-speaking world.

They have a real sense of stewardship for their land and environment, as

getting …

Continue reading »

I'm back! This time, I'm using the very awesome Pelican publishing platform,

as Blogger just got to be too much of a pain to use. Let's hope that the

simplicity of using reStructuredText, static pages, and outsourcing

discussions will make it so easy to blog that I actually keep doing it. I've

slapped together a basic theme, but no doubt I'll be tweaking it as time goes

on. For now, the theme isn't in a public repository. A quick shout out to

Font Awesome and font-linux for their very cool font icons.

I do intend to expand the themes I blog about. I'll still focus on Python

and GNU Mailman as well as other technology, but now I'll include tai chi,

music, and anything else that I feel the overwhelming urge to share. I hope I

can continue to present my thoughts in a respectful, positive, all-inclusive

voice.

I've migrated most of the pages from the old Blogger platform, and done some

minor updating as appropriate. One of the main reasons I've moved off of

Blogger was because of the pain of moderating comments. There was just too

much spam. I've switched to the third party discussion platform Disqus and

I've tried to import all the non-spammy original comments. I'm not sure I've

done that correctly, but we'll see!

You can contact me via the Social links in the side bar, and via the various

public mailing lists and IRC channels I hang out in. I hope to hear from you!